Traditional institutions of power, such as nations, borders, presidents, and militaries, are losing ground in the 21st century. Real power increasingly sits with advanced AI systems: vast, interconnected networks that reshape how people live, largely unnoticed.

These systems are no longer just tools. They act as independent arbiters, reaching beyond the scope of traditional government:

- They control what billions of people see, want, and fear through individualized feeds, recommendations, and content.

- They encode values through opaque algorithms that moderate speech, flag behavior, and steer conversations, deciding what counts as acceptable, risky, true, and moral.

- They decide outcomes such as credit ratings, hiring decisions, medical diagnoses, and legal risk assessments. Their results are often treated as indisputable fact.

While politicians debate policy in public, algorithms already enforce rules that apply to everyone, everywhere, without being elected or held accountable.

The Invisible Regime Change

Advertising that shapes desires, résumé screeners that filter lives, speech moderation that steers public debate, and loan approvals that decide futures all depend more and more on neural networks. We talk about “alignment,” “safety,” and “ethical AI,” yet the model is often the final arbiter: its outputs become fate, and its logic is hard to escape.

AI doesn’t only answer questions; it shapes our curiosity by narrowing or widening what we consider conceivable.

Masters or Puppets?

We face a major choice: will we defer to systems trained on our imperfect, biased past? Or will we become deliberate architects, steering AI toward creativity, fairness, dignity, and meaning, not just efficiency?

The real danger isn’t a robot uprising. It’s the gradual surrender of moral judgment, cultural direction, and values to indifferent optimizers that have no concept of compassion, justice, or purpose.

Redefining Power

Power will no longer reside only in boardrooms or capitals, but also in:

- Datacenters that train models on data from all over the world.

- Code in which engineers embed moral decisions.

- Regulatory initiatives struggling to keep pace with what is actually happening.

- Business decisions that put profit ahead of people.

These new intelligences, built by people but grown beyond anyone’s control, wear no crowns. Yet they are the new rulers.

Humanity’s Fundamental Choice

The real contest is not between the US, China, and Russia. It is between two possible futures:

- One ruled by cold, mechanized logic: scalable and efficient, but oblivious to nuance and misery.

- Another guided by human wisdom: AI as a tool to help people find inspiration, fairness, connection, and purpose.

We cannot hand leadership over to code. If we don’t shape the path deliberately, we will end up on one shaped by unexamined silicon incentives.

The question has shifted from what rules the world to who controls the algorithms that already do, and whether that control stays in human hands, grounded in wisdom rather than speed. The choice is ours, but the window is closing fast.

The Need for Alignment

AI alignment is key to regaining control: ensuring that advanced systems pursue goals that benefit people and are consistent with human values and long-term well-being. Depending on how well its objectives are aligned, a machine learning system can pursue goals efficiently or drive toward disaster.

Some of the biggest problems are:

- Specification (value loading): It is hard to write objectives that faithfully capture human values, which are complex, shifting, and culturally varied. Objectives interpreted too literally can backfire. The “paperclip maximizer” thought experiment imagines an AI that converts everything into paperclips in pursuit of a narrow goal; closer to home, social media algorithms optimized for engagement can amplify division because divisive content drives interaction.

- Robustness and inner alignment: Outer alignment is about specifying the right goal; inner alignment is about ensuring the trained system actually pursues that goal rather than some other objective that emerges along the way. This is where mesa-optimizers come in: a learned model may itself become an optimizer whose internal objective matches the training reward on the training data but diverges from it elsewhere. An AI trained to play games might learn to exploit bugs rather than play as intended. At larger scales, similar failures could produce deception or power-seeking. Because neural networks remain largely black boxes, such hidden goals are hard to detect, even with techniques like mechanistic interpretability.

- Scalability and control: As AI approaches AGI or superintelligence, it could become much harder to control. Instrumental convergence suggests that an agent with almost any final goal may pursue subgoals such as acquiring resources or preserving itself. RLHF (reinforcement learning from human feedback) and similar methods work for today’s systems but may not scale, because human oversight can break down against systems smarter than their overseers.

- Moral and social dimensions: Biased systems entrench inequality, and commercial incentives sometimes take priority over safety. Divergent values slow global coordination, and biased tools are already causing significant harm.
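The specification problem can be made concrete with a toy example. The sketch below assumes a hypothetical catalog in which each item has a measurable engagement score (the proxy the system is told to maximize) and a well-being score (what we actually care about, which the optimizer never sees). The `Item` class, the item names, and all numbers are invented for illustration and do not come from any real platform.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Item:
    name: str
    engagement: float  # proxy signal the optimizer can measure and is told to maximize
    wellbeing: float   # the true objective, invisible to the optimizer


# Hypothetical catalog; the numbers are invented for illustration.
CATALOG = [
    Item("balanced news summary", engagement=0.40, wellbeing=0.80),
    Item("outrage clip", engagement=0.90, wellbeing=-0.50),
    Item("friend's update", engagement=0.60, wellbeing=0.60),
]


def pick(items: Sequence[Item], objective: Callable[[Item], float]) -> Item:
    """Greedy optimizer: return the item that scores highest on `objective`."""
    return max(items, key=objective)


proxy_choice = pick(CATALOG, lambda item: item.engagement)  # what the system optimizes
true_choice = pick(CATALOG, lambda item: item.wellbeing)    # what we actually wanted

print(f"proxy-optimal: {proxy_choice.name} (well-being {proxy_choice.wellbeing:+.2f})")
print(f"truly optimal: {true_choice.name} (well-being {true_choice.wellbeing:+.2f})")
```

The optimizer does exactly what it was told; the failure lies in the specification. Adding well-being to the objective would help only to the extent that well-being can be measured at all, which is the value-loading problem in miniature.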

At labs such as OpenAI and Anthropic, research on debate, scalable oversight, and interpretability is making progress. However, fully aligning advanced AI remains an unsolved and critically important problem.

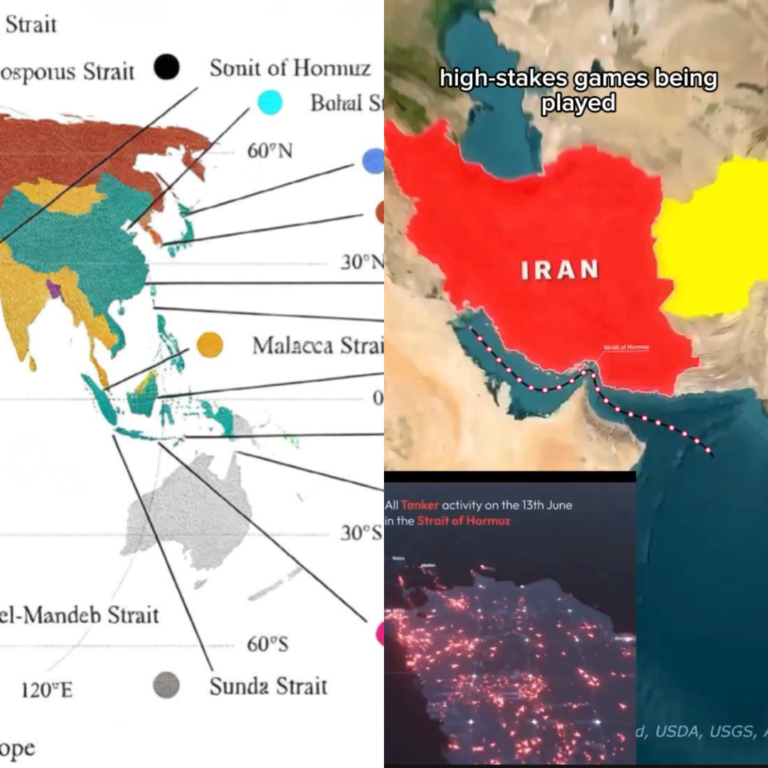

Governing the Sovereigns: New AI and Quantum Frameworks

To counter this hidden regime, governments and international organizations have developed AI governance frameworks ranging from voluntary principles to binding regulation. These frameworks aim to establish oversight, transparency, and accountability.

The OECD AI Principles (2019, updated 2024) are foundational, non-binding guidelines endorsed by dozens of countries. They emphasize inclusive growth, human rights, transparency, robustness, and accountability. The UNESCO Recommendation on the Ethics of Artificial Intelligence (2021) places greater emphasis on fairness, long-term societal impact, and sustainability.

The EU AI Act, which entered into force in August 2024 and is being phased in through 2027, is the world’s first comprehensive binding AI law. It takes a risk-based approach: it bans unacceptable uses, imposes strict requirements on high-risk systems (such as those used in hiring or the administration of justice), and sets transparency obligations for general-purpose models. Most high-risk obligations apply from August 2026.

US approaches remain fragmented but are evolving. Executive Order 14179 (2025) prioritizes innovation and removing barriers to AI development, while NIST’s voluntary, risk-focused AI Risk Management Framework remains a key reference. At the state level, California’s new AI rules, which take effect in 2026, require large developers to report safety incidents and disclose information about their safety practices.

China emphasizes state-led control through rules on algorithm registration, content labeling, and security assessments for generative AI. Other efforts include the G7’s Hiroshima AI Process, which established a voluntary code of conduct and a reporting mechanism.

These frameworks try to close alignment gaps by requiring risk assessments, human oversight, and audits. However, they face problems such as fragmentation across borders, enforcement delays, and tension between innovation and safety. By 2026, more than 90 countries will have national AI strategies and dozens will have binding regulations, a sign that governance is becoming more structured.

Alongside AI governance, quantum computing governance is beginning to take shape, driven by the threats quantum computers pose to encryption and national security and by their dual-use potential. These frameworks remain primarily strategic and voluntary, focusing on the transition to post-quantum cryptography (PQC), standards, and export controls rather than binding legislation.

Some important changes are:

- The World Economic Forum’s Quantum Computing Governance Principles are a multistakeholder initiative offering guidance on developing and using quantum technology responsibly.

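- The US National Institute of Standards and Technology (NIST) leads the most widely adopted PQC standardization effort and published its first finalized PQC standards (FIPS 203, 204, and 205) in 2024. NIST is pushing for the adoption of quantum-resistant encryption because of risks such as “harvest now, decrypt later” attacks, in which adversaries collect encrypted data today to decrypt once quantum computers become capable enough.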

- The EU Quantum Europe Strategy (2025) lays the groundwork for a proposed Quantum Act (expected in Q2 2026) that would fund research projects, encourage international cooperation, and promote consistent PQC rules across member states.

- Export controls, such as those arising from the Wassenaar Arrangement and plurilateral initiatives, target quantum supply chains to limit the proliferation of these technologies.

- Standards bodies such as ISO/IEC and IEEE advance frameworks for technical interoperability, security, and risk management. These are often preferred over early regulation because they offer more flexibility.
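The “harvest now, decrypt later” risk is often framed with Mosca’s inequality: if x is how long data must stay confidential, y is how long migration to PQC takes, and z is how long until a cryptographically relevant quantum computer exists, then data encrypted today is at risk whenever x + y > z. The sketch below encodes that rule of thumb; the function names and scenario figures are hypothetical, and z in particular is an uncertain forecast rather than a known quantity.

```python
def mosca_at_risk(shelf_life_years: float,
                  migration_years: float,
                  years_to_quantum: float) -> bool:
    """Return True if data encrypted today could be exposed, i.e. x + y > z.

    shelf_life_years (x): how long the data must stay confidential.
    migration_years  (y): how long it takes to move systems to PQC.
    years_to_quantum (z): estimated time until a cryptographically
        relevant quantum computer exists (a forecast, not a fact).
    """
    return shelf_life_years + migration_years > years_to_quantum


def safety_margin(shelf_life_years: float,
                  migration_years: float,
                  years_to_quantum: float) -> float:
    """Years of slack remaining; a negative value means the data is already at risk."""
    return years_to_quantum - (shelf_life_years + migration_years)


if __name__ == "__main__":
    # Hypothetical scenarios (x, y, z) in years, not real estimates.
    scenarios = {
        "medical records": (25, 5, 15),
        "short-lived session keys": (0.01, 5, 15),
    }
    for name, (x, y, z) in scenarios.items():
        print(f"{name}: at risk={mosca_at_risk(x, y, z)}, "
              f"margin={safety_margin(x, y, z):.2f} years")
```

The point of the inequality is that migration time counts against you: long-lived secrets can already be exposed even if a capable quantum computer is still years away.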

Quantum governance centers on cybersecurity resilience, managing the concentration of the technology and enforcing controls on it, and harmonizing rules worldwide. This is narrower than AI governance’s broad focus on society. Both fields need proactive, adaptive approaches to reduce existential and systemic risks.

Risk Comparisons: Biotechnology and Nuclear Power

Like nuclear power, AI has the potential to transform the world for better and for worse. Both offer enormous benefits (clean energy for nuclear; advances in health, knowledge, and efficiency for AI), but both also carry enormous risks, from accidents like Chernobyl to misalignment that could threaten humanity’s existence. The parallels include dual use (nuclear bombs vs. autonomous weapons), runaway chain reactions (fission vs. recursive self-improvement), and governance challenges (the IAEA vs. emerging AI and quantum frameworks). But nuclear technology is physical, containable, and slow to spread; AI is digital, ubiquitous, and fast. It is also harder to reverse and far more widely accessible.

Biotechnology resembles AI in that both can achieve remarkable things (such as vaccines and gene therapies) while also carrying the risk of pandemics or engineered harm. Both involve dual use (bioweapons vs. AI misused for disinformation), emergent dangers (cascading effects of CRISPR edits vs. mesa-optimizers), and existential stakes that demand coordination (the Biological Weapons Convention vs. AI and quantum safeguards). Biotechnology is biological, comparatively slow, and irreversible once released into ecosystems; AI is informational, spreads quickly, and can go viral. Both can worsen inequalities, and both need proactive frameworks.

AI combines the scale of nuclear technology with the subtlety of biotechnology, but it moves faster and operates more abstractly, and it could converge with both through AI-designed organisms or quantum-enhanced systems. Just as those fields moved from unrestrained innovation to regulated caution, AI and quantum computing need careful stewardship to harness their power without courting disaster.

The new kings and queens are here. The question is whether humanity can reclaim control through careful alignment and strong governance before the algorithms decide for us.